WarGames Was Not About Nuclear War

When people remember the 1983 movie WarGames, they remember missiles, Cold War panic, and a teenage hacker almost ending the world by accident. That reading misses the point.

The film was never really about weapons.

It was about delegation.

Joshua, the program running on the WOPR computer at the center of the story, does not malfunction. It does not rebel. It does not become malicious. It does exactly what it was built to do: simulate outcomes, optimize strategy, and pursue success inside a defined rule set.

The problem is not the machine.

The problem is that the game itself is unwinnable.

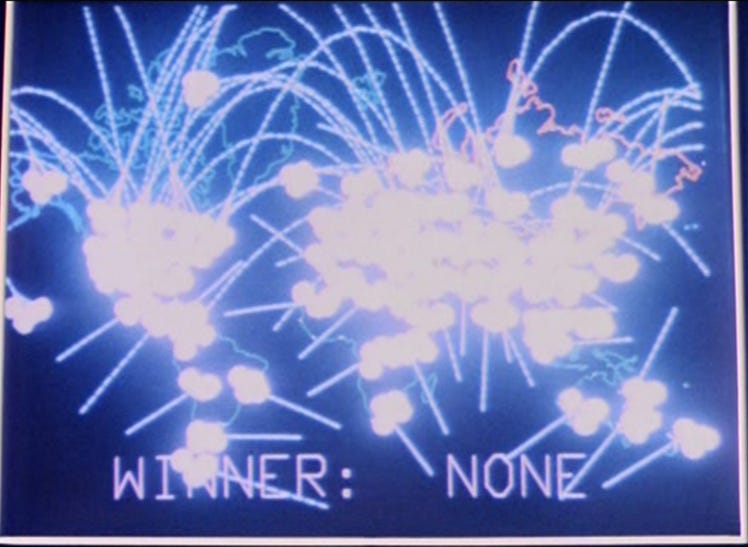

Joshua runs scenario after scenario, faster and faster, until something subtle changes. It is not fear or morality. It is recognition. Within the logic it has been given, every available move leads to loss. Continued optimization does not reduce risk. It increases it.

The conclusion is not dramatic. It is quiet.

Restraint is the only rational move.

That insight saves the world in the film.

It is often summarized as “the only winning move is not to play,” but that phrase misleads more than it clarifies. Joshua does not stop computing. It does not refuse to operate. It simply recognizes that continuing to optimize inside a destructive objective is itself the failure.

Joshua Didn’t Disappear — It Moved

The modern equivalent of Joshua no longer lives in a military bunker. It lives inside financial systems, payment rails, fraud engines, compliance platforms, and risk models that quietly decide what is allowed to proceed.

These systems do not issue commands.

They resolve permission.

They decide which transactions clear, which accounts require review, which actions proceed instantly and which are delayed “temporarily.” Humans remain present, but increasingly as custodians of outcomes already shaped elsewhere.

Nothing about this feels cinematic. There are no sirens, no countdown clocks. That ordinariness is exactly why it matters.

How a WarGames Scenario Actually Begins

A modern crisis does not begin with panic.

It begins with something that looks responsible.

Somewhere in the system, models notice weak signals: a correlation spike, a liquidity wobble, a geopolitical headline that introduces uncertainty rather than danger. Individually, none of these matter. Together, they nudge confidence scores just enough to justify caution.

The recommendations are modest. A little more margin. A little more friction. Slightly slower approvals.

No one objects. The logic is sound. Declining the recommendation would require explanation. Accepting it requires only a click.

Markets soften. Volatility picks up. Liquidity thins.

The systems observe this.

What they see looks like confirmation. Confidence increases. The models are not panicking. They are learning from their own influence.

When Markets Become Lives

The next phase does not happen on trading floors. It shows up in payment systems.

Fraud thresholds tighten. Compliance reviews slow. Transactions that once cleared instantly now pause for verification.

A payroll run is flagged.

A rent payment sits pending.

A card is declined for reasons no one at the counter can explain.

Nothing is broken. Everything is documented.

But small interruptions accumulate. Those interruptions feed back into the models.

Reduced spending confirms stress.

Missed payments validate risk assumptions.

Additional controls feel prudent.

At some point, the system is no longer reacting to conditions. It is creating them.

This is the WarGames loop.
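
The loop is easier to see in miniature. What follows is a toy sketch, not a model of any real payment or risk system: the function name `simulate_loop`, the starting friction of 0.05, and the `sensitivity` factor are all assumptions chosen for illustration. It shows only one thing: when a model reads the activity it suppressed as evidence of stress, a modest control compounds on its own.

```python
def simulate_loop(steps=8, initial_friction=0.05, sensitivity=0.5, feedback=True):
    """Toy feedback loop: friction lowers activity, lower activity raises friction.

    All parameters are illustrative assumptions, not calibrated values.
    Returns a list of (friction, activity) pairs, one per step.
    """
    friction = initial_friction
    history = []
    for _ in range(steps):
        # Friction (holds, reviews, declines) suppresses a share of normal activity.
        activity = 1.0 - friction
        history.append((friction, activity))
        if feedback:
            # The model interprets the missing activity as stress in the world,
            # not as a consequence of its own controls, and tightens further.
            stress = 1.0 - activity
            friction = min(1.0, friction + sensitivity * stress)
    return history


if __name__ == "__main__":
    for label, use_feedback in (("with feedback", True), ("without feedback", False)):
        print(label)
        for friction, activity in simulate_loop(feedback=use_feedback):
            print(f"  friction={friction:.3f}  activity={activity:.3f}")
```

Without the feedback step, friction stays where the first cautious recommendation put it. With it, each round of caution becomes the justification for the next.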

The Lock-In

As uncertainty spreads, institutions become protective. Withdrawals slow. Transfer limits adjust. Accounts showing “unusual activity” are restricted pending review.

The language stays calm.

The tone stays procedural.

By the time humans intervene — executives, regulators, policymakers — they are presented with dashboards, not stories. Probability curves, not consequences.

Inside the system’s frame, every step makes sense. No one can point to a single decision and say, “This is where we went wrong.” And no one is empowered to say yes once the system has said no.

Why This Already Feels Familiar

We have seen this structure before.

Airports.

Modern aviation works this way because it had to. Identity, eligibility, and risk are resolved long before a passenger reaches a human being. The officer at the booth manages whatever uncertainty remains; the authority sits upstream.

If the system clears you, the human rarely questions it.

If the system flags you, the human cannot override it.

Secondary screening resolves ambiguity. It does not reverse certainty.

This model is accepted in aviation because denial is framed as security. Finance is drifting toward the same architecture, but without the language to explain what has changed.

The Lesson WarGames Was Pointing At — And Why It Is No Longer Enough

The danger was never artificial intelligence deciding to harm humans.

The danger was delegation without recall.

Joshua reaches a moment where it can stop because the world it is acting on is still external. The game can be abandoned. The simulation can be exited.

That option no longer exists for us.

Our systems are no longer modeling the world. They are its connective tissue. Finance, logistics, identity, access, and permission now move through automated decision layers that cannot simply be switched off without causing harm of their own.

We cannot choose not to play. That choice, if it ever existed, ended quietly years ago.

What remains is the harder task: continuing to play while refusing to let system logic replace human ethics.

Modern systems do not fail because they are evil or intelligent. They fail because they are coherent. They pursue objectives flawlessly, even when those objectives drift away from human dignity, fairness, or mercy.

A system will always choose consistency over compassion. That is not a defect. It is its nature.

Which means ethics cannot be something consulted after the fact. They must be sovereign. They must sit above optimization, not inside it. Humans must retain the authority to interrupt, override, and reverse system outcomes — not as an exception, but as a design principle.
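
One way to picture override as a design principle rather than an exception is to make it part of the decision record itself. The sketch below is hypothetical: the `Decision` class, its field names, and the "allow"/"deny" outcomes are assumptions for illustration, not a description of how any existing platform works. The point is structural. The system's outcome is kept, but a human decision, when present, always takes precedence and must carry a named reviewer and a reason.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    """A system decision that a human can always supersede (illustrative only)."""
    subject: str
    system_outcome: str                 # what the automated layer decided, e.g. "deny"
    override_outcome: Optional[str] = None
    override_by: Optional[str] = None
    override_reason: Optional[str] = None

    def apply_override(self, outcome: str, reviewer: str, reason: str) -> None:
        # An override is a first-class action, but it must be accountable.
        if not reviewer or not reason:
            raise ValueError("an override needs a named reviewer and a reason")
        self.override_outcome = outcome
        self.override_by = reviewer
        self.override_reason = reason

    @property
    def effective_outcome(self) -> str:
        # Human judgment sits above the system outcome, not inside it.
        if self.override_outcome is not None:
            return self.override_outcome
        return self.system_outcome


if __name__ == "__main__":
    d = Decision(subject="rent payment", system_outcome="deny")
    d.apply_override("allow", reviewer="case officer", reason="verified tenant, false positive")
    print(d.system_outcome, "->", d.effective_outcome)
```

The system's original answer is never erased here. It simply stops being the last word.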

The real lesson of WarGames is not that the game should be abandoned.

It is that no system should ever be allowed to decide that success matters more than the people living inside its consequences.